Column: The George Carlin auto-generated comedy special is everything that’s wrong with AI right now

... The special, tastefully titled “George Carlin: I’m Glad I’m Dead,” is one of the most unpleasant things ostensibly produced for entertainment purposes that I have ever sat through. It’s a stroll through an uncanny valley of Carlin’s comedy, an audio program in which a serviceable replica of the familiar raspy voice delivers “jokes” on topics from mass shootings to Taylor Swift to artificial intelligence. ...

But what bothers me uniquely about this episode is that it serves as a grim snapshot of where so much of the AI industry is at, a year into its reign as the dominant tech trend: Here we have an apparently impressive technology — we can’t know for sure, because the details are concealed in the production process, and almost surely involve ample human labor — designed not to meaningfully entertain, or to present any actual utility, but to exist wholly as a warped advertisement for itself. ...

See the full story here: https://www.latimes.com/business/technology/story/2024-01-18/column-the-george-carlin-auto-generated-comedy-special-is-everything-thats-wrong-with-ai-right-now

World’s first ‘retina resolution’ holograms have arrived, startup claims

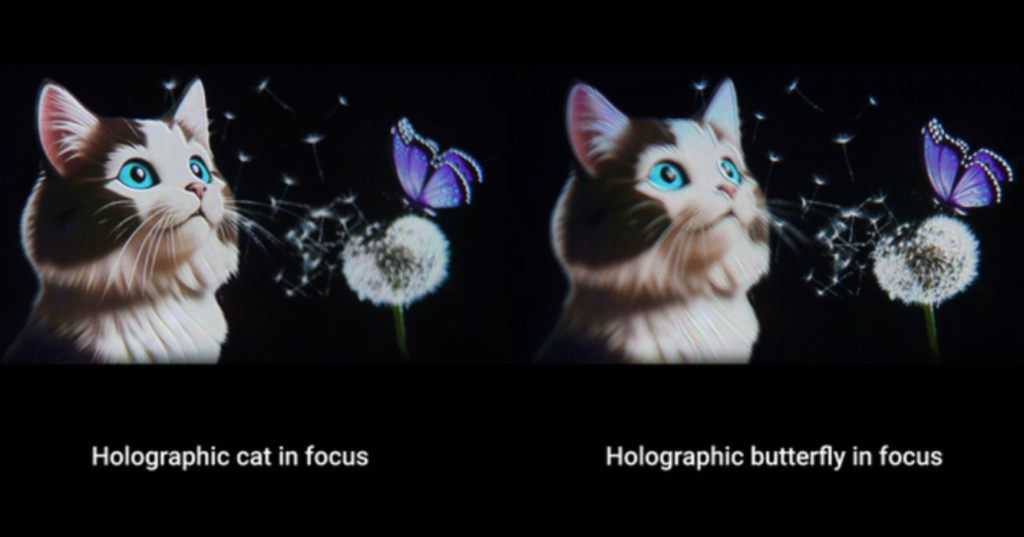

UK startup VividQ claims to have created the first-ever holograms with a “retina resolution.”

The milestone means holography can now match the resolution and real-life focus cues expected by the human eye, according to VividQ. The result is a “more natural viewing experience than ever before,” the company said. It now plans to deploy the tech in next-generation VR headsets. ...

VividQ projects the computer-generated images through high-performance 4K display hardware. Consequently, 3D holographic objects can be placed at any distance within a person’s focal range. Users can then naturally shift focus between the objects. ...

To showcase the benefits, VividQ has displayed the retina resolution holograms with a high-performance LCoS (liquid crystal on silicon) display from JVCKenwood (JKC), a Japanese electronics firm. The two companies are now collaborating to commercialise the tech. ...

See the full story here: https://thenextweb.com/news/vividq-first-retina-resolution-holograms

Disney unveils ‘HoloTile’ floor technology for omnidirectional VR experiences

Walt Disney Imagineering has unveiled the ‘HoloTile’ floor for omnidirectional VR experiences, created by Disney Research fellow Lanny Smoot.

The new technology was revealed as Smoot, a member of Walt Disney Imagineering’s research and development (R&D) division, is being inducted into the National Inventors Hall of Fame. ...

In a new Disney Parks video, Smoot explains: “It will automatically do whatever it needs to have me stay on the floor. What’s amazing about this is multiple people can be on it and all walking independently. They can walk in virtual reality and so many other things.” ...

See the full story here: https://blooloop.com/theme-park/news/disney-imaginnering-holotile-floor-lanny-smoot/?fbclid=IwAR3J31262fMA9NN7amfwmUajeVrM4U1qwerUl0zxmBrhFwQP1X6wtita-hQ

Meet qd.pi (“Cutie Pie”), will.i.am’s AI Co-Host of His AI-Themed SiriusXM Show (Exclusive)

'Will.i.am Presents the FYI Show' will debut on Jan. 25, and the musician will chat about topics like music, pop culture and technology with his artificial intelligence co-host, the first of its kind on SiriusXM. ...

The interactive show is powered by will.i.am’s FYI app, an AI-powered communication and creative collaboration tool he launched last year. ...

See the full story here: https://www.hollywoodreporter.com/news/music-news/will-i-am-ai-co-host-siriusxm-show-1235788455/

CES: Session Details the Impact and Future of AI Technology

By Phil Lelyveld

January 10, 2024

Dr. Fei-Fei Li, Stanford professor and co-director of Stanford HAI (Human-Centered AI), and Andrew Ng, venture capitalist and managing general partner at Palo Alto-based AI Fund, discussed the current state and expected near-term developments in artificial intelligence. As a general purpose technology, AI development will both deepen, as private sector LLMs are developed for industry-specific needs, and broaden, as open source public sector LLMs emerge to address broad societal problems. Expect exciting advances in image models — what Li calls "pixel space." When implementing AI, think about teams rather than individuals, and think about tasks rather than jobs.

Rajeev Chand, partner and head of research at Wing Venture Capital in Palo Alto, moderated the discussion.

From left to right: Rajeev Chand, Dr. Fei-Fei Li, Andrew Ng

Will the Current AI Hype Cycle Lead to Another AI Winter?

Ng responded that because AI is a general purpose technology, like electricity, it has many use cases and will continue to grow. Li added that media coverage will go in waves, but this is a “deepened horizontal technology” that is a true transformative force in the next digital revolution. It is changing the very fabric of our societal, political and economic landscape.

Predictions for the Near Future

We are on the verge of exciting advances in pixel space, Li suggested, marking a shift from large language models to image models. Ng added that this shift covers both creating images and analyzing them in any situation where you have a camera (for example, self-driving cars).

Public sector AI, or open source AI, will be better resourced and will develop alongside private sector AI, said Li. Open source LLMs will reach the level of closed LLMs, but the two will diverge because they will be developed with very different data sources. The closed source LLMs will deepen with industry-specific knowledge, while the open source LLMs will broaden as they address wider societal matters.

Autonomous agents that can plan and execute a sequence of actions in response to a request are barely working now, but Ng expects significant advances in the near future. Li respectfully requested changing the language from autonomous agent to assistive agent. As the tech rolls out, long-tail results matter and human intervention is required to catch errors such as AI hallucinations. Part of the work can be autonomous, but part of the work must be collaborative with humans.

Human-Centered AI (HAI): Running AI on your laptop is possible, even though it is not as powerful as a full LLM. Ng believes that manufacturers will market laptops powerful enough to run AI locally, and that will trigger another wave of sales as companies and consumers upgrade.

Guidelines for Implementing AI

Li stressed the importance of distinguishing between replacing jobs and replacing tasks. For example, a nurse’s 8-hour shift involves hundreds of tasks, she said, and some of them can be AI-assisted.

Li described three levels of the implementation problem: understanding the data, making decisions, and understanding intention. We are getting very good at the first, recognizing patterns in the data. The second, decision-making, is much more nuanced. And we are just scratching the surface of AI's understanding of intention.

Look at the 'team' rather than the 'individual,' and at the 'task' rather than the 'job,' Ng recommended. Assess what can be augmented by AI with a clear ROI (return on investment). He stressed that the highest-ROI task is often not obvious.

It may be tempting to let AI read an X-ray instead of a radiologist, but the highest-ROI use of AI may be gathering patient data. He has seen that niche, industry-specific tasks, rather than obvious general tasks, often benefit most from AI implementation.

Another way to assess where AI adds value is to look where you have the most good-quality data, said Li. If you can discern repeatable patterns in the data, then the patterns can be actionable.

Video of the 40-minute panel — “Great Minds, Bold Visions: What’s Next for AI?” — is available on the CES site.

See the original post here: https://www.etcentric.org/ces-session-details-the-impact-and-future-of-ai-technology/

CES: VW Press Event Emphasizes a Future Transformed by AI

By Phil Lelyveld

January 9, 2024

Volkswagen’s CES press conference on Monday gave us a window into what we expect to see during this week’s CES 2024. The presentation centered entirely around artificial intelligence. VW has partnered with Cerence to speed the integration of AI tech into their vehicles’ in-cabin experience. The implementation touches on combining AI with spatial web capabilities. And VW has worked to make the in-car experience seamlessly compatible with AI-enhanced in-home and mobile device experiences that consumers are embracing. Not once during the presentation did they mention anything about car design or performance other than how it relates to AI implementation.

VW will be adding ChatGPT to the voice control system in all of its new models, including internal combustion engine models. Their pitch is two-fold: first, democratizing technology is in VW’s DNA — and second, AI is relevant to their customers, who expect intuitive experiences and human-like interactions in their cars similar to the ones in their homes and on their phones.

Because human language is very complex, VW has partnered with Cerence, which specializes in automotive AI integration. Chat Pro, Cerence’s implementation of AI with the existing VW IDA voice assistant, seamlessly responds to questions, routes car-specific voice commands, and allows VW to feed the system with customized information. It is integrated into the infotainment, navigation and climate control systems.

The live demo covered a number of areas. When the person sitting in the front passenger seat said "Hello IDA. Turn on the temperature to 74 degrees," IDA responded with "All right. I'm setting the temperature in the right front area to 74.0 degrees." Cerence audio AI detected the location of the speaker and provided an appropriate location-specific response. Think of this as the pairing of AI with spatial audio, which will evolve over time into spatial web implementations.
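The seat-aware reply in the demo implies that the system can tell which seat a command came from. Cerence did not describe how it does this, so the Python sketch below is only a simplified illustration. It assumes one microphone per seat zone and picks the zone whose microphone hears the loudest signal; real in-car systems are more likely to use microphone arrays and beamforming. The zone names and the `locate_speaker` and `climate_reply` helpers are hypothetical.

```python
import math
from typing import Dict, List


def rms(samples: List[float]) -> float:
    """Root-mean-square level of one frame of audio samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def locate_speaker(frames_by_zone: Dict[str, List[float]]) -> str:
    """Hypothetical: pick the seat zone whose microphone captured the
    strongest signal for the current utterance."""
    return max(frames_by_zone, key=lambda zone: rms(frames_by_zone[zone]))


def climate_reply(zone: str, temp_f: float) -> str:
    """Hypothetical confirmation phrase modeled on the demo's wording."""
    return f"All right. I'm setting the temperature in the {zone} area to {temp_f:.1f} degrees."


# One short frame per microphone; in this made-up data the right-front mic is loudest.
frames = {
    "left front": [0.01, -0.02, 0.015],
    "right front": [0.40, -0.50, 0.45],
    "rear": [0.05, 0.00, -0.04],
}
zone = locate_speaker(frames)
print(climate_reply(zone, 74))
```

Comparing loudness per zone is the crudest possible localizer; it breaks down when passengers talk over each other, which is one reason commercial systems invest in more sophisticated spatial audio processing.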

In another situation, you can tell IDA that you need medicine, or you want to buy a phone charger, and it will respond with a list of, and a map to, nearby stores that sell the item.

Finally, they demonstrated in-car natural language search and voice responses to any general knowledge query by asking the system to explain a student's homework assignment. Cerence CTO Iqbal Arshad said the system is "safe, secure, and instant." Only general knowledge questions will be routed up to the LLM, he noted. Everything else will be processed in-car for low latency.
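The split Arshad describes, with car-specific commands handled in the car and general knowledge questions sent to a cloud LLM, is a form of hybrid routing. A minimal Python sketch of the idea follows. It assumes simple keyword matching and a made-up list of car intents; Cerence's actual classifier and intent set were not described in the presentation, and a real system would use a trained language-understanding model.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Route(Enum):
    ON_DEVICE = auto()  # handled in the car for low latency
    CLOUD_LLM = auto()  # sent to a cloud-hosted LLM


# Hypothetical car-specific intents; keyword matching stands in for a
# trained intent classifier.
CAR_INTENT_KEYWORDS = {
    "climate": ("temperature", "heat", "air conditioning", "fan"),
    "navigation": ("navigate", "route", "directions", "nearby"),
    "media": ("play", "volume", "radio", "song"),
}


@dataclass
class Decision:
    route: Route
    intent: str


def route_utterance(utterance: str) -> Decision:
    """Send car-specific commands to the on-device handler and
    everything else (general knowledge) to the cloud LLM."""
    text = utterance.lower()
    for intent, keywords in CAR_INTENT_KEYWORDS.items():
        if any(k in text for k in keywords):
            return Decision(Route.ON_DEVICE, intent)
    return Decision(Route.CLOUD_LLM, "general_knowledge")


print(route_utterance("Hello IDA. Turn on the temperature to 74 degrees"))
print(route_utterance("Can you explain my homework on the French Revolution?"))
```

Defaulting unmatched requests to the cloud mirrors the description above: the car keeps the commands it knows how to execute and forwards open-ended questions.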

The AI assistant will be offered in Europe in the Golf, Passat, Tiguan, ID.3, ID.4, ID.5, and ID.7 starting in the second quarter of 2024. They have not yet decided when to make it available in the US market.

The long-term goal, Cerence CEO Stefan Ortmanns explained, is to work with VW to evolve the system from an in-car assistant to a human-like companion.

They closed the event by asking IDA to provide closing remarks, which was effectively a general demo of ChatGPT and text-to-voice rather than anything related to VW, thereby reinforcing the idea that this year AI will be the star of CES.

The Volkswagen Newsroom offers details on its integration of ChatGPT. The 20-minute CES presentation is available on YouTube.

See the original post here: https://www.etcentric.org/ces-vw-press-event-emphasizes-a-future-transformed-by-ai/

CES: Will.i.am Discusses the Intersection of Music and Tech

By Phil Lelyveld

January 10, 2024

Musician will.i.am of the Black Eyed Peas — who is also a noted technologist, entrepreneur, investor and philanthropist — discussed his work with Mercedes-AMG, why he attends the CES conference each year in Las Vegas, and his vision of the future. In 2022 he was asked by Mercedes to reimagine a vehicle. He loves pattern-matching, he said, and seeing how things align. After developing ideas with his team and auctioning off the working prototype WILL.I.AMG to raise funds for his inner-city education philanthropy, he went back to Mercedes with a simple but powerful pitch with a focus on audio.

Car passengers are accustomed to the roar of the internal combustion engine as a car’s ambient sound. Electric vehicles are either silent rides, or they have artificial sounds pumped through the speakers to correspond to normal driver activities like acceleration and braking.

Our experience with music has been shaped by how it was captured on lacquer: unchanging and repeatable. What if you captured data from the car's sensors — accelerometer, gyroscope, compass, brakes, etc. — and used it to create a real-time audio driving experience?

He pulled a team together on his own and developed MBUX Sound Drive, “a fusion of cutting-edge technology and musical artistry, raising the Mercedes-AMG in-car entertainment experience to an even higher level,” which is being demonstrated at CES 2024.

The future of in-car music, will.i.am said, is real-time live music generation. Music unfolds and gets remapped and rearranged based on what you do. Even if you have a fixed commute, there are differences every day in how you wait at a light, hit a bump, or weave in traffic. It will turn your daily commute into a new experience every day.
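To make the idea concrete, here is a hypothetical Python sketch of how per-frame sensor readings might be mapped to musical parameters. The specific mappings (acceleration to tempo, yaw rate to stereo pan, braking to a low-pass filter cutoff), the ranges, and every name in the code are illustrative assumptions, not how MBUX Sound Drive actually works.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    accel_mps2: float      # longitudinal acceleration (m/s^2), negative when slowing
    yaw_rate_dps: float    # gyroscope yaw rate (deg/s), positive when turning left
    brake_pressure: float  # normalized 0.0 (off) to 1.0 (full braking)


@dataclass
class MusicParams:
    tempo_bpm: float
    pan: float             # -1.0 full left ... +1.0 full right
    filter_cutoff_hz: float


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def map_frame(frame: SensorFrame, base_tempo: float = 100.0) -> MusicParams:
    """Hypothetical mapping from one sensor frame to music parameters."""
    # Accelerating speeds the groove up, slowing down relaxes it (+/- 30 BPM).
    tempo = base_tempo + clamp(frame.accel_mps2, -3.0, 3.0) * 10.0
    # Turning left pans the mix left; 30 deg/s saturates the pan.
    pan = clamp(-frame.yaw_rate_dps / 30.0, -1.0, 1.0)
    # Braking closes a low-pass filter from 18 kHz down to 500 Hz.
    cutoff = 18_000.0 - clamp(frame.brake_pressure, 0.0, 1.0) * 17_500.0
    return MusicParams(tempo, pan, cutoff)


print(map_frame(SensorFrame(accel_mps2=0.0, yaw_rate_dps=0.0, brake_pressure=0.0)))
print(map_frame(SensorFrame(accel_mps2=5.0, yaw_rate_dps=60.0, brake_pressure=1.0)))
```

Calling `map_frame` many times per second on live sensor data would let the same piece shift with every stop, turn and bump, which is the "different every day" commute will.i.am describes.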

It will turn drivers into composers the way Instagram turned people into photographers. Professional musicians could sell pieces to be reworked by the car’s motion, and drivers could capture and share their driving compositions.

Will.i.am comes to CES to see “what the big boys are afraid to put out fast.” He primarily looks at the small booths where he can find great ideas presented by “big brains with small budgets.” But he also has seen amazing ideas in prototype demos from big companies that won’t be put into products until Legal sees how the public responds to them.

Will.i.am has been working in AI since at least 2015, when his team prototyped a smartwatch and later implemented their AI into Beats headphones. He linked AI to the term Abracadabra. Folk etymologies attribute it to ancient Greek or Latin words meaning “I will create as I speak.” Historically, it has been used for incantations, but it also echoes how we interact with AI algorithms, he said. Just as abracadabra incantations summoned change, he proposed that AI will overturn old industries and create new ones.

The people who will create those new industries, he posited, are the downtrodden who will use AI to learn and advance from their oppression — like the people digging up the minerals in Africa and South America that are used to make consumer electronics. The future isn’t “Terminator” with one global OS and AI. It is “Star Wars” with multiple robots, multiple operating systems, and multiple AIs.

AI can already be your genie for information, he suggested. As AI spreads, it will be everyone’s genie for aspirations.

See the original post here: https://www.etcentric.org/ces-will-i-am-discusses-the-intersection-of-music-and-tech/?fbclid=IwAR1fo_133W1W_ITDFvkiyq7VFZzY9zkKG6seoyq1-Rk6YjpdsJaR-6dxsfM

CES: Panelists Weigh Need for Safe AI That Serves the Public

By Phil Lelyveld

January 16, 2024

A CES session on government AI policy featured an address by Assistant Secretary of Commerce for Communications and Information Alan Davidson (who is also administrator of the National Telecommunications and Information Administration), followed by a discussion of government activities, and finally industry perspective from execs at Google, Microsoft and Xperi. Davidson studied at MIT under nuclear scientist Professor Philip Morrison, who spent the first half of his career developing the atomic bomb and the second half trying to stop its use. That lesson was not lost on Davidson. At NTIA they are working to ensure "that new technologies are developed and deployed in the service of people and in the service of human progress."

There is no better example of the impact of technology on humanity than the global conversation we are having now around AI. Davidson said that we will only achieve the promise of AI if we address the real risks that it poses today — including safety, security, privacy, innovation, and IP concerns. There is a strong sense of urgency across the Biden administration and in governments around the world to address these concerns.

He described President Biden’s executive order (EO) on AI. Directed by the EO, NIST is establishing a new AI safety institute, the Patent and Trademark Office is exploring copyright issues, and NTIA is working on AI accountability and the benefits and risks of openness. Governments, businesses, and civil society are stepping up to engage in the dialogue that will shape AI’s future.

U.S. government activities were discussed by Stephanie Nguyen, CTO of the Federal Trade Commission, and Sam Marullo, counselor to the Secretary of Commerce. Nguyen explained that the FTC’s mission is to protect against unfair, deceptive, and anticompetitive acts and practices. There are “no AI exemptions on the books,” she said.

The President’s EO on AI aspires to safe, secure, and trustworthy AI. Both the FTC and Department of Commerce are working to build the best multidisciplinary subject-matter-expertise teams to address key aspects of this challenge. Those teams will include government, private sector, and civil society representation.

Open questions that the panel discussed include how AI will impact establishing provenance, ‘know your customer’ models, and patent and copyright issues. There is bipartisan interest in Congress to do “something,” Marullo said, but discussions are in their very early stages.

Industry perspective was articulated by Xperi VP of Marketing David McIntyre, Google Global Cloud AI Policy Lead Addie Cooke, and Microsoft Senior Director for AI Policy Danyelle Solomon.

McIntyre made a key point about AI and regulation when he said that AI is not a fundamentally new problem for the agencies. It is simply a new tool to be applied to the use cases for which the agencies have already developed regulations. The agencies should focus on the end goal of the use cases, he suggested, and identify the gaps and holes that AI introduces, and develop regulations to address these issues rather than develop a separate AI regulatory regime.

Cooke was heartened to hear the FTC suggest that a diversity of people, skills, and ideas is important for policy development. She stressed the need to coordinate standards development nationally and internationally, rather than have individual jurisdictions develop their own local regulations. She noted that the first ISO standard for AI management systems (ISO/IEC 42001) was recently published, and NIST has put out AI standards. At the federal level, Solomon listed a number of bills in development that she views positively.

“Something I’m sure we all feel pretty strongly about,” said McIntyre, “is making sure you’re regulating the use-case rather than the underlying technology.” How do you make sure your AI rules and safeguards are part of the engineering mindset and then how do you build them into the test framework? How do you incorporate tests for potential risks at a micro level so they are as integral to the QC process as any other feature that is prone to attack?

The President's EO on AI set up NIST's Artificial Intelligence Safety Institute with a proposed $10 million budget, and a pilot program through the National Science Foundation and partners for a National Artificial Intelligence Research Resource (NAIRR) that will establish a shared national research infrastructure. Solomon called these critical resources and expressed her support for them.

What stood out in the EO for McIntyre was the comment that AI is not an exception or something new. The agencies’ fundamental rule-making roles continue. “AI is a tool that achieves an end, and you’re regulating that end,” he said.

Cooke added that privacy regulators need to remind players in their jurisdictions that the rules around privacy, security and other issues have not changed. It doesn’t matter what the technology is. You have to respect the rules and regulations.

Solomon called for a national privacy law. The three panelists agreed that national policies and laws on many of these key issues, rather than state level laws, would be desirable.

See the original post here: https://www.etcentric.org/ces-panelists-weigh-need-for-safe-ai-that-serves-the-public/

US university enrolling AI-generated students to participate in lessons and assignments

The project is a way to help the university learn how to make education more accessible to all

A Michigan university is believed to be the first in the US to enrol artificial intelligence students onto its classes.

The AI students won’t have a physical, robotic form but will be attending classes virtually.

Ferris State associate professor Kasey Thompson said the two virtual students will tune in to lectures, interact in hybrid classes, and complete assignments.

...

Thompson told MLive: “Like any student, our hope is that they continue their educational experience all the way up as far as they can go, through their PhD.

“But we are literally learning as we go, and we’re allowing the two AI students to pick the courses that they’re going to take. ...

See the full story here: https://www.standard.co.uk/news/tech/us-artificial-intelligence-enrol-university-students-b1132027.html

The Right Way to Regulate AI

... The United States does not need so many new AI policies. It needs a new kind of policymaking. ...

The problem with reaching for a twentieth-century analogy is that AI simply does not resemble a twentieth-century innovation. ...

Instead of reaching to twentieth-century regulatory frameworks for guidance, policymakers must start with a different first step: asking themselves why they wish to govern AI at all. Drawing back from the task of governing AI is not an option. ...

Technologies that went undergoverned are now hastening democratic decline, intensifying insecurity, and eroding people’s trust in institutions worldwide.

But when tackling AI governance, it is crucial for leaders to consider not only what specific threats they fear from AI but what type of society they want to build. ...

The Biden administration has begun to make moves to apply the same approach to AI. In October 2022, the White House released its Blueprint for an AI Bill of Rights, which was distilled from engagement with representatives of various sectors of American society, including industry, academia, and civil society. The blueprint advanced five propositions: AI systems should be safe and effective. The public should know that their data will remain private. The public should not be subjected to the use of biased algorithms. Consumers should receive notice when an AI system is in use and have the opportunity to consent to using it. And citizens should be able to loop in a human being when AI is used to make a consequential decision about their lives. The document identified specific practices to encode public benefits into policy instruments, including the auditing, assessment, “red teaming,” and monitoring of AI systems on an ongoing basis. ...

If policymakers return to first principles such as those invoked in the AI Bill of Rights when governing AI, they may also recognize that many AI applications are already subject to existing regulatory oversight. ...

It is much less common in the world of policymaking. But NIST’s use of policy versioning will permit an agile approach to the development of standards for AI. NIST also accompanied its framework with a “playbook,” a practical guide to the document that will be updated every six months with new resources and case studies. ...

Democratic leaders must understand that disrupting and outpacing the regulatory process is part of the tech industry’s business model. Anchoring their policymaking process on fundamental democratic principles would give lawmakers and regulators a consistent benchmark against which to consider the impact of AI systems and focus attention on societal benefits, not just the hype cycle of a new product. ...

AI governance need not be a drag on innovation. Ask bankers if unregulated lending by a competitor is good for them. ...

See the full story here: https://www.foreignaffairs.com/united-states/right-way-regulate-ai-alondra-nelson?utm_source=substack&utm_medium=email
