How the Magic Leap 2 is Powering the New Mercedes Vision 111

... Magic Leap’s latest solution aims to bypass traditional augmented dashboards with its immersive AR goggles. With a wide field of view (FoV), the head-mounted display (HMD) offers drivers a trove of features. ...

The unprecedented concept car aims to combine AR overlays with an intuitive HUD, complete with a 70-degree FoV. This offers instantaneous data on road conditions and streamlines components for the vehicle by eliminating dashboards or satnav systems.

Offering vehicle feedback similar to the Vision 111’s, Magic Leap’s solution also aims to increase road safety and scale up the future potential of augmented automotive interfaces. It additionally offers drivers low-latency, real-time hand- and eye-tracking solutions. ...

See the full story here: https://www.xrtoday.com/augmented-reality/how-the-magic-leap-2-is-powering-mercedes-vision-111/

The Future Is Now: Innovative Ways Virtual Reality Is Revolutionizing Healthcare Marketing

... In the “Migraine Experience”, VR was used to educate the general public on the severity of migraines – they’re “more than just a headache”. People who don’t usually get migraines had the opportunity to experience the common symptoms first-hand – including blurred vision, disorientation, and sensitivity to sound and light. With its new medication, GlaxoSmithKline also made sure to establish itself as the solution to the pervasive problem of migraines (currently affecting 39 million people in the United States). Participant reactions were also filmed and compiled into an impactful marketing video that’s been viewed on social media over twelve million times. ...

See the full story here: https://www.healthcareittoday.com/2023/07/31/the-future-is-now-innovative-ways-virtual-reality-is-revolutionizing-healthcare-marketing/

Cryptography may offer a solution to the massive AI-labeling problem

... There’s a big problem, though: identifying material that was created by artificial intelligence is a massive technical challenge. The best options currently available—detection tools powered by AI, and watermarking—are inconsistent, impermanent, and sometimes inaccurate. (In fact, just this week OpenAI shuttered its own AI-detecting tool because of high error rates.) ...

But another approach has been attracting attention lately: C2PA. Launched two years ago, it’s an open-source internet protocol that relies on cryptography to encode details about the origins of a piece of content, or what technologists refer to as “provenance” information.

The developers of C2PA often compare the protocol to a nutrition label, but one that says where content came from and who—or what—created it. ...

The project, part of the nonprofit Joint Development Foundation, was started by Adobe, Arm, Intel, Microsoft, and Truepic, which formed the Coalition for Content Provenance and Authenticity (from which C2PA gets its name). Over 1,500 companies are now involved in the project through the closely affiliated open-source community, the Content Authenticity Initiative (CAI), including ones as varied and prominent as Nikon, the BBC, and Sony. ...

The major media platform Shutterstock has joined as a member and announced its intention to use the protocol to label all its AI-generated content, including its DALL-E-powered AI image generator. ...

What is C2PA and how is it being used?

Microsoft, Intel, Adobe, and other major tech companies started working on C2PA in February 2021, hoping to create a universal internet protocol that would allow content creators to opt in to labeling their visual and audio content with information about where it came from. (At least for the moment, this does not apply to text-based posts.)

Crucially, the project is designed to be adaptable and functional across the internet, and the base computer code is accessible and free to anyone. ...

More specifically, it works by encoding provenance information through a set of hashes that cryptographically bind to each pixel, says Jenks, who also leads Microsoft’s work on C2PA. ...
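The sketch below shows how a hash can "bind" provenance information to content. It computes a single SHA-256 digest over the raw pixel bytes and stores it alongside the claims, so changing even one pixel breaks the binding. It then signs the claims so that they cannot be quietly edited either.

This is not the C2PA format. The manifest field names are hypothetical, and the HMAC shared key is a stand-in for the certificate-backed public-key signatures the real specification uses. Its own manifest structure and binding rules are not reproduced here.

```python
import hashlib
import hmac
import json

# Hypothetical stand-in for a creator's signing key. Real C2PA manifests are
# signed with certificate-backed public-key signatures, not a shared HMAC key.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, creator: str, tool: str) -> dict:
    """Bind provenance claims to the exact content bytes via a hash, then sign them."""
    claims = {
        "creator": creator,  # hypothetical field names, not the C2PA schema
        "generator": tool,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claims, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Check the signature over the claims, then check the content hash still matches."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claims were altered after signing
    return manifest["claims"]["content_sha256"] == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    pixels = bytes(range(256)) * 4  # toy "image": 1,024 raw pixel bytes
    manifest = make_manifest(pixels, "Example Studio", "hypothetical-image-generator")
    print(verify_manifest(pixels, manifest))  # True

    edited = bytearray(pixels)
    edited[0] ^= 0xFF  # change a single pixel value
    print(verify_manifest(bytes(edited), manifest))  # False
```

The two checks fail in different ways. Editing the image leaves the signature valid but breaks the hash match. Editing the claims, for example swapping in a different creator, breaks the signature. Neither check says anything about whether the content itself is true, which is the limitation the article raises.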

C2PA offers some critical benefits over AI detection systems, which use AI to spot AI-generated content and can in turn learn to get better at evading detection. It’s also a more standardized and, in some instances, more easily viewable system than watermarking, the other prominent technique used to identify AI-generated content. The protocol can work alongside watermarking and AI detection tools as well, says Jenks. ...

That said, provenance information is far from a fix-all solution. C2PA is not legally binding, and without required internet-wide adoption of the standard, unlabeled AI-generated content will exist, says Siwei Lyu, a director of the Center for Information Integrity and professor at the University at Buffalo in New York. “The lack of over-board binding power makes intrinsic loopholes in this effort,” he says, though he emphasizes that the project is nevertheless important. ...

What’s more, since C2PA relies on creators to opt in, the protocol doesn’t really address the problem of bad actors using AI-generated content. And it’s not yet clear how much the provision of metadata will help the public’s media fluency. Provenance labels do not necessarily indicate whether the content is true or accurate. ...

Ultimately, the coalition’s most significant challenge may be encouraging widespread adoption across the internet ecosystem, especially by social media platforms. ...

The cruellest show on TV? Deep Fake Love goes too far with AI

...

At the end of each day, one half of each couple is shown videos of their partner kissing or behaving sexually with the singles.

Watching the reactions of the individuals who are seeing their partners cheating on them and breaking their already fragile trust makes for gutting viewing - but it’s not real.

In fact, it’s not until after the couples are shown the traumatic videos - many of them visibly devastated - that they’re told the films could be fake.

Host Raquel Sánchez Silva explains to the participants that the scenes showing their partners being unfaithful are mostly false, altered with deep fake technology.

With that, the game is afoot. ...

It turns out there’s a €100,000 prize at stake, but it isn’t awarded on the basis of fidelity or trust. Instead, the winners will be the couple who can correctly identify the largest number of videos as either real or deep fake. ...

See the full story here: https://www.euronews.com/culture/2023/07/25/the-cruellest-show-on-tv-deep-fake-love-goes-too-far-with-ai

At Wagner’s Festival, New Technology Reveals a Leadership Rift

The American director Jay Scheib was looking at a bank of monitors inside the Bayreuth Festival Theater on a recent afternoon.

He was rehearsing his new production of Wagner’s “Parsifal,” which opens the storied Bayreuth Festival on Tuesday, and as performers circled a large metallic monolith onstage, the screens showed three-dimensional flowers floating through blank space — psychedelic animations that will come to life for audience members who see them with augmented-reality glasses. ...

Scheib’s production is one of the most ambitious, and high-profile, attempts to incorporate augmented reality into opera performance. But it also caps months of tumult at Bayreuth, after plans to outfit nearly 2,000 audience members with the glasses for each performance were downscaled because of an apparent money dispute between the festival’s artistic and financial leadership. The compromise, in which only 330 attendees will be provided with the glasses to experience the production’s signature flourishes, has left many fuming, and concerned that internal conflicts at one of the most important events in opera were undermining its relevance. ...

The use of the technology, Scheib said, was in keeping with Wagner’s own way of approaching opera. “He carried out so many innovations, with lighting and architecture,” he added. “Ultimately, he wanted the theater to completely disappear.” ...

See the full story here: https://www.nytimes.com/2023/07/24/arts/music/parsifal-augmented-reality-bayreuth-rift.html

Venice Immersive 2023: Virtual Reality Lineup (Venezia 80)

PhilNote: this article contains brief descriptions of the entries as well as some previews.

See the full story here: https://loudandclearreviews.com/venice-immersive-2023-virtual-reality-lineup-venezia-80/

How Do the White House’s A.I. Commitments Stack Up?

Overall, the White House’s deal with A.I. companies seems more symbolic than substantive. There is no enforcement mechanism to make sure companies follow these commitments, and many of them reflect precautions that A.I. companies are already taking.

Still, it’s a reasonable first step. And agreeing to follow these rules shows that the A.I. companies have learned from the failures of earlier tech companies, which waited to engage with the government until they got into trouble. In Washington, at least where tech regulation is concerned, it pays to show up early.

Commitment 1: The companies commit to internal and external security testing of their A.I. systems before their release.

Commitment 2: The companies commit to sharing information across the industry and with governments, civil society and academia on managing A.I. risks.

Commitment 3: The companies commit to investing in cybersecurity and insider-threat safeguards to protect proprietary and unreleased model weights.

Commitment 4: The companies commit to facilitating third-party discovery and reporting of vulnerabilities in their A.I. systems.

Commitment 5: The companies commit to developing robust technical mechanisms to ensure that users know when content is A.I.-generated, such as a watermarking system.

Commitment 6: The companies commit to publicly reporting their A.I. systems’ capabilities, limitations, and areas of appropriate and inappropriate use.

Commitment 7: The companies commit to prioritizing research on the societal risks that A.I. systems can pose, including on avoiding harmful bias and discrimination and protecting privacy.

Commitment 8: The companies commit to developing and deploying advanced A.I. systems to help address society’s greatest challenges.

See the full story here: https://www.nytimes.com/2023/07/22/technology/ai-regulation-white-house.html

Five Takeaways from the U.S. Senate Subcommittee on Intellectual Property’s AI and Copyright Hearing

On July 12, 2023, the U.S. Senate Committee on the Judiciary’s Subcommittee on Intellectual Property held its second hearing on artificial intelligence (AI) and intellectual property (IP). The first hearing, held on June 7, 2023, focused on AI’s implications for patent law, while this second hearing centered on copyright issues. ...

Front and center was the thorny issue of training generative AI models. ...

Accordingly, the senators focused their questioning mainly on five topics, providing a glimpse of what potential federal legislation could look like:

- Need for New Federal Legislation?

- Licensing for Training, and Questions of Opt-Out and Opt-In Policies

- Fair Use

- Comparative International Standards

- Consumer Disclosures

While the senators certainly seemed eager in their questions to understand the AI landscape and what Congress can do to protect IP and creators’ rights, how serious Congress is about enacting federal legislation to protect the creative industry and regulate generative AI remains to be seen. It also remains an open question how these new proposals might interact with existing enforcement tools under copyright law, the Lanham Act, and state laws. ...

See the full story here: https://www.jdsupra.com/legalnews/five-takeaways-from-the-u-s-senate-6730697/

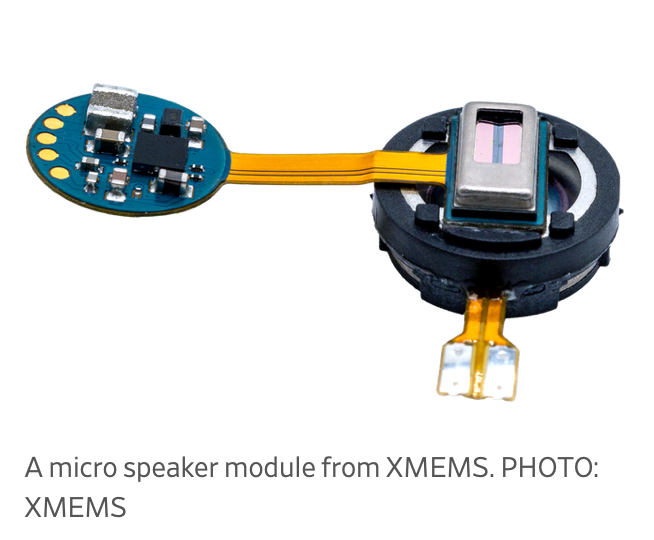

Exotic New Silicon-Based Speakers Are Coming to Next-Generation Earbuds

The way humans reproduce recorded sound could change more in the next decade than it has in the past century.

What’s coming are solid-state speakers, etched from wafers of ultrapure silicon—like microchips. That means they operate like nothing available today—and also that they have capabilities that no existing sound-reproduction system can match. ...

One recipient of the prototype in-ear monitors (the kind of high-fidelity earbuds professionals use when mastering musical tracks) is Brian Lucey. A mastering engineer for nine Grammy Award winners, Lucey told me that the solid-state speakers in the in-ear monitors he’s using have become indispensable. ...

The engineers at xMEMS take things a step further: their entire speaker, including its vibrating membrane, is fashioned on a wafer of ultrapure silicon, the same kind used to make the microchips that are the “brains” of nearly every computing device in the world. ...

See the full story here: https://www.wsj.com/articles/exotic-new-silicon-based-speakers-are-coming-to-next-generation-earbuds-ee99b76b#

Pentagon AI more ethical than adversaries’ because of ‘Judeo-Christian society,’ USAF general says

An Air Force general said the Pentagon’s code of ethics surrounding the use of artificial intelligence is better than some other countries' because of the United States’ “Judeo-Christian” foundation. ...

"What will the adversary do? It depends who plays by the rules of warfare and who doesn't. There are societies that have a very different foundation than ours,” Moore said. ...

The three-star general spoke the same week that experts testified on Capitol Hill about the dangers of allowing China to beat the U.S. in the race to harness the power of AI.

His remarks also came more than a year into the brutal invasion of Ukraine by Russia, whose leaders have justified the war as a defense of Christian society. ...

See the full story here: https://www.defenseone.com/technology/2023/07/pentagon-ai-more-ethical-adversaries-because-judeo-christian-society-usaf-general-says/388711/
