Augmented Reality System Lets User “Be the OS”

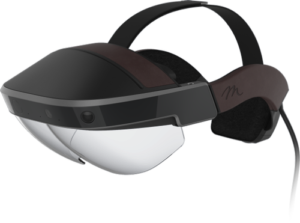

At 3:35 in the video, Dr. Adam Gazzaley at his lab at UCSF accesses, manipulates and shares 3D digital holographic information in an intuitive way using natural hand gestures. He developed his “glass brain project” using the neuroscience-driven interface of Meta’s Meta 2 Development Kit.

“Our mission is to take AR to the next level by bringing together displays, embedded systems, optics, sensors, computer vision and SLAM (simultaneous localization and mapping), and then pack all these advanced techniques into a mobile, head-worn form factor,” said Harner.

“The user is the OS.

“That means we reduce complexity by spatializing content in front of the user, rather than placing content in nested hierarchies, like folders, as operating systems have traditionally done. This improves working memory and reduces load on the machine.”

It also happens to be the way humans have always worked with physical tools in 3D space. We reach out and move them around. In other words, for the processing to be fast, accurate and efficient, the relationship between input and output — the thought/action and the resulting outcome/reaction — must be maximized. Traditional operating systems (OSes) for 2D space use a chain of abstractions that creates a disconnect between thought and action, placing an unbearable load on working memory.

Thus, Meta’s technology allows the creation of AR tools to organize digital content alongside physical tools in the user’s physical workspace.

See the full story here: http://www.eetimes.com/author.asp?section_id=36&doc_id=1330308
