AI RESEARCH IS IN DESPERATE NEED OF AN ETHICAL WATCHDOG

Right now, if government-funded scientists want to research humans for a study, the law requires them to get the approval of an ethics committee known as an institutional review board, or IRB. Stanford’s review board approved Kosinski and Wang’s study. But these boards use rules developed 40 years ago for protecting people during real-life interactions, such as drawing blood or conducting interviews.

For example, if you merely use a database without interacting with real humans for a study, it’s not clear that you have to consult a review board at all. Review boards aren’t allowed to evaluate a study based on its potential social consequences. “The vast, vast, vast majority of what we call ‘big data’ research does not fall under the purview of federal regulations,” says Metcalf.

But ultimately, NamePrism is just a tool, and it’s up to users how they wield it. “You can use a hammer to build a house or break a house,” says sociologist Matthew Salganik of Princeton University and the author of Bit by Bit: Social Research In The Digital Age.

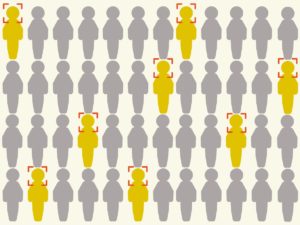

Instead, the problem is the broken ethical system around it. AI researchers—sometimes with the noblest of intentions—don’t have clear standards for preventing potential harms. “It’s not very sexy,” says Metcalf. “There’s no Skynet or Terminator in that narrative.”

Pervade ... they received a three million dollar grant from the National Science Foundation, and over the next four years, Pervade wants to put together a clearer ethical process for big data research that both universities and companies could use. “Our goal is to figure out, what regulations are actually helpful?” he says. But before then, we’ll be relying on the kindness—and foresight—of strangers.

See the full story here: https://www.wired.com/story/ai-research-is-in-desperate-need-of-an-ethical-watchdog/

Pervade website - https://pervade.umd.edu
