A radical new neural network design could overcome big challenges in AI

Researchers borrowed equations from calculus to redesign the core machinery of deep learning so it can model continuous processes like changes in health.

...data from medical records is kind of messy: throughout your life, you might visit the doctor at different times for different reasons, generating a smattering of measurements at arbitrary intervals. A traditional neural network struggles to handle this. Its design requires it to learn from data with clear stages of observation.

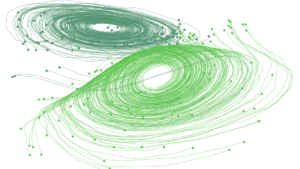

A traditional neural net is made up of stacked layers of simple computational nodes that work together to find patterns in data. The discrete layers are what keep it from effectively modeling continuous processes (we’ll get to that).

In response, Duvenaud’s design scraps the layers entirely.

Calculus gives you all these nice equations for how to calculate a series of changes across infinitesimal steps—in other words, it saves you from the nightmare of modeling continuous change in discrete units. This is the magic of Duvenaud’s paper: it replaces the layers with calculus equations.
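
To make the swap from layers to equations concrete, here is a minimal sketch rather than the paper's implementation. It assumes a hypothetical `dynamics` function standing in for a learned network, chosen as simple decay so the exact answer is known. Each "layer" nudges the hidden value by a small step, and as the number of layers grows, the stack approaches the solution of the differential equation dh/dt = f(h, t).

```python
import math

def dynamics(h, t):
    """Hypothetical stand-in for a learned function f(h, t); here, simple decay."""
    return -h

def residual_stack(h0, num_layers, t0=0.0, t1=1.0):
    """Discrete view: each 'layer' applies the update h <- h + dt * f(h, t)."""
    dt = (t1 - t0) / num_layers
    h, t = h0, t0
    for _ in range(num_layers):
        h = h + dt * dynamics(h, t)
        t += dt
    return h

# Closed-form solution of dh/dt = -h at t = 1, starting from h(0) = 1.
exact = math.exp(-1.0)

# Error of the layered approximation for increasingly deep stacks.
errors = {n: abs(residual_stack(1.0, n) - exact) for n in (4, 16, 64, 256)}
for n, err in errors.items():
    print(f"{n:4d} layers: error {err:.5f}")
```

The error shrinks as the stack gets deeper, which is the intuition behind treating depth as a continuous quantity and handing the whole computation to an equation solver.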

The new method allows you to specify your desired accuracy first, and it will find the most efficient way to train itself within that margin of error.
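
The accuracy-first idea can be illustrated with an adaptive-step solver. The sketch below is an assumption-laden toy, not the solver used in the paper: it uses a basic Heun-Euler pair, a hypothetical `dynamics` function, and a made-up `adaptive_solve` helper. The caller supplies a tolerance, and the solver decides how many steps (and therefore how many function evaluations) it needs to stay within it.

```python
import math

def dynamics(h, t):
    """Hypothetical stand-in for a learned function f(h, t); here, simple decay."""
    return -h

def adaptive_solve(h0, t0, t1, tol):
    """Integrate dh/dt = dynamics(h, t) from t0 to t1 with adaptive step size.

    Returns the final value and the number of dynamics evaluations used.
    """
    h, t = h0, t0
    dt = (t1 - t0) / 10  # initial guess for the step size
    evals = 0
    while t1 - t > 1e-12:
        dt = min(dt, t1 - t)  # never step past the end point
        k1 = dynamics(h, t)
        k2 = dynamics(h + dt * k1, t + dt)
        evals += 2
        euler = h + dt * k1              # first-order estimate
        heun = h + dt * 0.5 * (k1 + k2)  # second-order estimate
        err = abs(heun - euler)          # gap between the two estimates the step error
        if err <= tol:
            # Accept the step and keep the more accurate value.
            t += dt
            h = heun
        # Grow or shrink the next step based on how the error compares to tol.
        scale = 0.9 * math.sqrt(tol / max(err, 1e-16))
        dt *= min(2.0, max(0.2, scale))
    return h, evals

exact = math.exp(-1.0)
loose_h, loose_evals = adaptive_solve(1.0, 0.0, 1.0, tol=1e-2)
tight_h, tight_evals = adaptive_solve(1.0, 0.0, 1.0, tol=1e-6)
print("loose tolerance:", loose_evals, "evaluations, error", abs(loose_h - exact))
print("tight tolerance:", tight_evals, "evaluations, error", abs(tight_h - exact))
```

A looser tolerance finishes in fewer evaluations at the cost of a larger error, while a tighter one spends more computation to get closer to the true answer. That trade-off is set by a single number rather than by choosing a layer count in advance.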
