We are hurtling toward a glitchy, spammy, scammy, AI-powered internet

...

I just published a story that sets out some of the ways AI language models can be misused. I have some bad news: It’s stupidly easy, it requires no programming skills, and there are no known fixes. Take a type of attack called indirect prompt injection. All you need to do is hide a prompt in a cleverly crafted message on a website or in an email, for example in white text that is invisible to the human eye against a white background. Once you’ve done that, you can order the AI model to do what you want. ...

Allowing these language models to pull data from the internet gives hackers the ability to turn them into “a super-powerful engine for spam and phishing,” says Florian Tramèr, an assistant professor of computer science at ETH Zürich who works on computer security, privacy, and machine learning. ...

Unlike the spam and scam emails of today, in which people have to be tricked into clicking on links, these new kinds of attacks will be automated and invisible to the human eye. ...

Deeper Learning

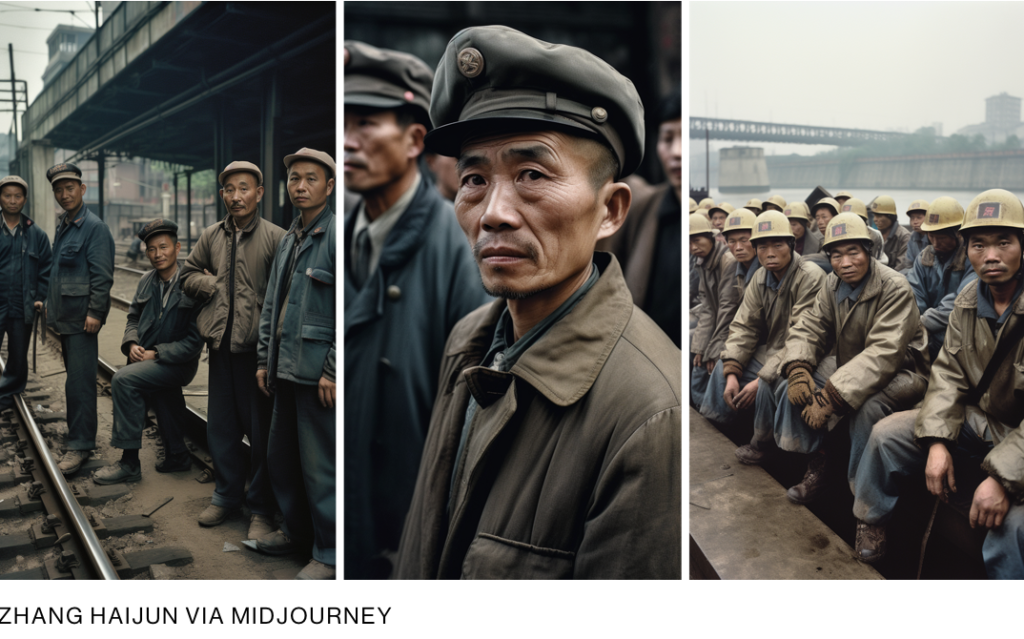

Chinese creators use Midjourney’s AI to generate retro urban “photography”