This new data poisoning tool lets artists fight back against generative AI


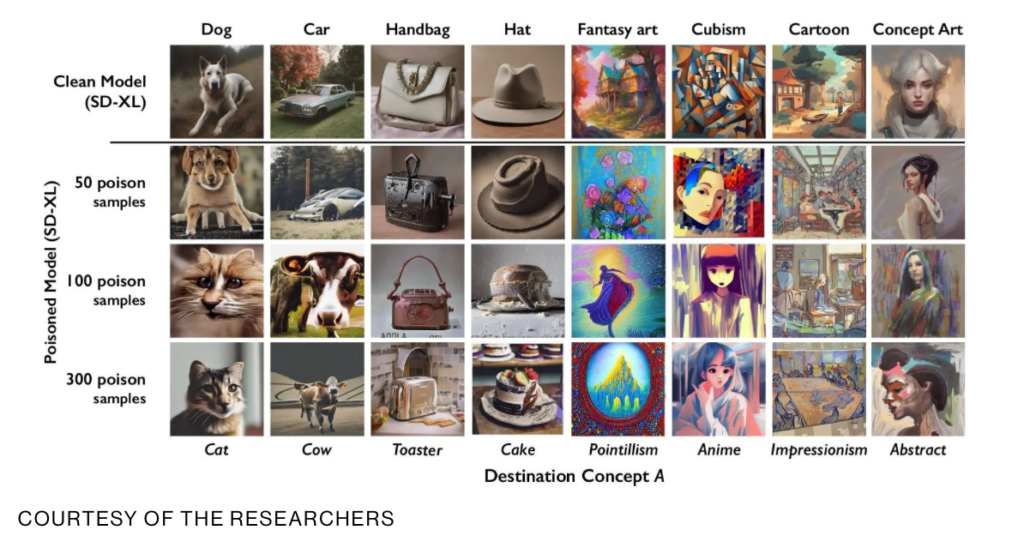

What’s happening: A new tool lets artists make invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

Why it matters: The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creators’ permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless.

How it works: Nightshade exploits a security vulnerability in generative AI models, one arising from the fact that they are trained on vast amounts of data (in this case, images that have been hoovered up from the internet). Poisoned data samples can manipulate models into learning, for example, that images of hats are cakes, and images of handbags are toasters. Currently, it is almost impossible to defend against this sort of attack.
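To make the hat-to-cake idea concrete, here is a minimal toy sketch in Python. It is not Nightshade's actual method, which the summary above does not describe in detail. It assumes each image has already been reduced to a two-number feature vector, and the "model" only learns the average features for each caption. The names `train`, `nearest_concept`, and `TRUE_LOOK`, and all the numbers, are hypothetical and chosen purely for illustration.

```python
from statistics import mean

def train(samples):
    """Toy 'generative model': learn the mean feature vector per caption."""
    by_caption = {}
    for caption, features in samples:
        by_caption.setdefault(caption, []).append(features)
    return {
        caption: tuple(mean(axis) for axis in zip(*vectors))
        for caption, vectors in by_caption.items()
    }

def nearest_concept(point, reference):
    """Return the reference concept whose features lie closest to point."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(reference, key=lambda name: squared_distance(point, reference[name]))

# Assumed ground truth: what hats and cakes "really" look like in feature space.
TRUE_LOOK = {"hat": (0.0, 0.0), "cake": (10.0, 10.0)}

# Clean scraped data: captions match the features.
clean = [
    ("hat", (0.0, 1.0)), ("hat", (1.0, 0.0)), ("hat", (-1.0, -1.0)),
    ("cake", (10.0, 9.0)), ("cake", (9.0, 11.0)), ("cake", (11.0, 10.0)),
]

# Poisoned samples: captioned "hat" but carrying cake-like features,
# standing in for images altered so a model reads them as cakes.
poison = [("hat", (10.0, 10.0))] * 5

clean_model = train(clean)
poisoned_model = train(clean + poison)

# What does each model produce when asked for a "hat"?
clean_output = nearest_concept(clean_model["hat"], TRUE_LOOK)
poisoned_output = nearest_concept(poisoned_model["hat"], TRUE_LOOK)
print(clean_output, poisoned_output)
```

In this toy setup, the model trained on clean data returns something hat-like when asked for a hat, while the model trained on the poisoned set returns something closer to a cake. Real image generators are far more complex, so this only illustrates the general principle that mislabeled-looking training samples can shift what a model associates with a word.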
