The AI deepfake apocalypse is here. These are the ideas for fighting it.

Watermarking AI images

When President Biden signed a landmark executive order on AI in October, he directed the government to develop standards for companies to follow in watermarking their images. ...

“Watermarking will definitely help,” Dekens said. But “it’s certainly not a waterproof solution, because anything that’s digitally pieced together can be hacked or spoofed or altered,” he said. ...
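To show why a watermark is "not a waterproof solution," here is a toy least-significant-bit (LSB) watermark. It is a hypothetical illustration only. It is not the scheme any company uses, and not what the executive order specifies. The idea is to hide a short bit pattern in the lowest bit of a few pixel values, then apply a tiny edit that changes those hidden bits. The pixel list, the `MARK` pattern and the `brighten` edit are all made up for the example.

```python
# Toy least-significant-bit (LSB) watermark: a hypothetical illustration,
# not the scheme any company or the executive order specifies.

def embed(pixels: list[int], bits: list[int]) -> list[int]:
    """Hide one watermark bit in the lowest bit of each of the first len(bits) pixels."""
    out = list(pixels)
    for i, b in enumerate(bits):
        out[i] = (out[i] & ~1) | b  # clear the lowest bit, then set it to the mark bit
    return out

def extract(pixels: list[int], n: int) -> list[int]:
    """Read back the lowest bit of the first n pixels."""
    return [p & 1 for p in pixels[:n]]

def brighten(pixels: list[int], delta: int = 1) -> list[int]:
    """A trivial edit: shift every 8-bit value by delta, clamped to 0..255."""
    return [min(255, max(0, p + delta)) for p in pixels]

MARK = [1, 0, 1, 1, 0, 0, 1, 0]                      # hypothetical watermark bits
image = [120, 45, 200, 33, 78, 91, 150, 12, 64, 255]  # hypothetical grayscale pixels

marked = embed(image, MARK)
print(extract(marked, len(MARK)) == MARK)            # the mark reads back intact
print(extract(brighten(marked), len(MARK)) == MARK)  # a +1 brightness edit destroys it
```

Real watermarking schemes try to survive edits like this one, but the same cat-and-mouse problem applies. Anything embedded digitally can, in principle, be stripped or forged.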

Labeling real images

On top of watermarking AI images, the tech industry has begun talking about labeling real images as well, layering data into each pixel right when a photo is taken by a camera to provide a record of what the industry calls its “provenance.” ...
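The core of a provenance record can be sketched as a cryptographic tag computed over the exact image bytes at capture time. Any later change then fails verification. This is a minimal sketch under stated assumptions. It uses an HMAC with a hypothetical shared `CAMERA_KEY` as a stand-in for the public-key signatures that real provenance systems rely on. It also keeps the tag next to the image rather than layering it into the pixels, as the industry proposals described here do.

```python
import hashlib
import hmac

# Hypothetical device key. Real provenance schemes use public-key signatures,
# so anyone can verify a photo without holding the camera's secret.
CAMERA_KEY = b"hypothetical-camera-secret"

def sign_at_capture(image_bytes: bytes) -> str:
    """Produce a tag bound to the exact pixel bytes at the moment of capture."""
    return hmac.new(CAMERA_KEY, image_bytes, hashlib.sha256).hexdigest()

def verify(image_bytes: bytes, tag: str) -> bool:
    """Check that the bytes are unchanged since they were signed."""
    expected = sign_at_capture(image_bytes)
    return hmac.compare_digest(expected, tag)  # constant-time comparison

photo = bytes([120, 45, 200, 33, 78, 91])  # hypothetical raw pixel data
tag = sign_at_capture(photo)

print(verify(photo, tag))           # untouched original verifies
edited = bytes([121]) + photo[1:]   # change a single pixel value by 1
print(verify(edited, tag))          # any edit breaks the record
```

This design does not prove a fake is fake. It only lets an unaltered real photo prove where it came from, which is why it complements watermarking rather than replacing it.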

“It’s dangerous to believe there are actual solutions against malignant attackers,” said Vivien Chappelier, head of research and development at Imatag, a start-up that helps companies and news organizations put watermarks and labels on real images to ensure they aren’t misused. But making it harder to accidentally spread fake images or giving people more context into what they’re seeing online is still helpful. ...

Detection software

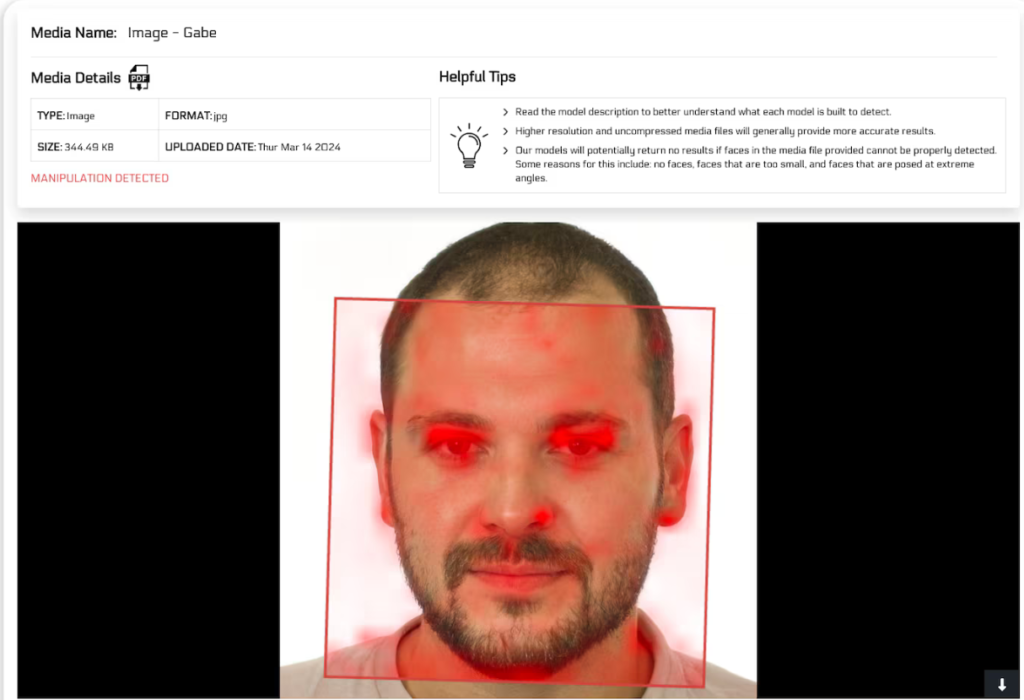

Some companies, including Reality Defender and Deep Media, have built tools that detect deepfakes based on the foundational technology used by AI image generators.

When an AI algorithm is shown tens of millions of images labeled as fake or real, the model learns to distinguish between the two, building an internal “understanding” of which elements might give an image away as fake. Images are then run through this model, and if it detects those elements, it flags the image as AI-generated. ...
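The training process described above is, at its core, supervised binary classification. Below is a deliberately tiny sketch of it. It makes several assumptions: every "image" is reduced to two hypothetical feature scores, the data is synthetic, and the model is plain logistic regression trained by gradient descent. Commercial detectors train deep neural networks on real images instead. Only the basic loop is shared: show labeled examples, adjust the model, then flag new inputs whose predicted probability of being fake is high.

```python
import math
import random

def make_dataset(n: int, seed: int = 0) -> list[tuple[list[float], int]]:
    """Synthetic stand-in for labeled images: label 1 = fake, 0 = real.
    Each 'image' is reduced to two hypothetical feature scores."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 2.0 if label else 0.0  # fakes cluster around (2, 2), reals around (0, 0)
        features = [rng.gauss(center, 0.5), rng.gauss(center, 0.5)]
        data.append((features, label))
    return data

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs: int = 300, lr: float = 0.5) -> tuple[list[float], float]:
    """Fit logistic-regression weights by full-batch gradient descent."""
    w, b = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in data:
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y  # predicted minus true label
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b

def predict_fake(w: list[float], b: float, x: list[float]) -> bool:
    """Flag an input as AI-generated when the model's probability exceeds 0.5."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b) > 0.5

train_set = make_dataset(1000, seed=0)
test_set = make_dataset(200, seed=1)  # held-out examples the model never saw
w, b = train(train_set)
accuracy = sum(predict_fake(w, b, x) == bool(y) for x, y in test_set) / len(test_set)
print(f"held-out accuracy: {accuracy:.2f}")
```

The toy data here is cleanly separable, so the model scores well. Real detectors face the problem Feizi describes: each new generator shifts what "fake" features look like, so a model trained on yesterday's fakes can miss tomorrow's.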

There are other things to look for, too, such as whether a person has a vein visible in the anatomically correct place, said Ben Colman, founder of Reality Defender. “You’re either a deepfake or a vampire,” he said. ...

“If the problem is hard today, it will be much harder next year,” said Feizi, the University of Maryland researcher. “It will be almost impossible in five years.”

Assume it’s fake

... “Assume nothing, believe no one and nothing, and doubt everything,” said Dekens, the open-source investigations researcher. “If you’re in doubt, just assume it’s fake.” ...

See the full story here: https://www.washingtonpost.com/technology/2024/04/05/ai-deepfakes-detection
